Twitter's Face Recognition Has Issues

Sometimes people on Twitter post large images, and when they’re displayed, it’s hard to see what the point of the image is. Sure, the user could upload a smaller, cropped image that gets to the point, but users could do many things, and mostly don’t, because it’s a pain.

So Twitter has introduced autocropping. At least, this is what I assume to be their reasoning. Looking at it this way, it makes sense.

And there are libraries out there that pick out faces. I’ve played a little with OpenCV, an older library that dates from before the ML boom we’re in right now. So cropping to the faces must be a no-brainer, right?
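To make the idea concrete, here is a minimal sketch of face-based autocropping as I imagine it. It is not Twitter's code, and I don't know how their pipeline actually works. The function names (`pick_face`, `crop_around_face`) and the detection format, an `(x, y, w, h)` box paired with a confidence score, are my own assumptions standing in for whatever a real face detector would return.

```python
def pick_face(detections):
    """Pick the box of the highest-scoring detection.

    detections: list of ((x, y, w, h), score) pairs, a hypothetical
    stand-in for a face detector's output.
    """
    box, _score = max(detections, key=lambda d: d[1])
    return box


def crop_around_face(image_size, face_box, crop_size):
    """Return (left, top, right, bottom) of a crop window centered on a face.

    image_size: (width, height) of the full image
    face_box:   (x, y, w, h) of the chosen face
    crop_size:  (width, height) of the preview, assumed to fit in the image
    """
    img_w, img_h = image_size
    x, y, w, h = face_box
    crop_w, crop_h = crop_size

    # Center of the detected face.
    cx = x + w // 2
    cy = y + h // 2

    # Center the window on the face, then clamp it to stay inside the image.
    left = min(max(cx - crop_w // 2, 0), img_w - crop_w)
    top = min(max(cy - crop_h // 2, 0), img_h - crop_h)
    return (left, top, left + crop_w, top + crop_h)


# A tall 600x3000 image with one face near the top and one near the bottom.
# The scores are made up for illustration.
detections = [((250, 50, 100, 100), 0.91), ((250, 2700, 100, 100), 0.62)]
print(crop_around_face((600, 3000), pick_face(detections), (600, 335)))
```

In a sketch like this, the crop itself is trivial arithmetic. All of the judgment lives in the scores, so whichever face the detector is more confident about wins the preview.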

Well, there are issues, like the cropping consistently choosing white faces over black faces, no matter what else is going on.

I suspect I know the reason.

Nobody intended it to choose Mitch McConnell over Barack Obama every time, like clockwork.

A lack of intention is part of the problem. And we’ve seen it before.

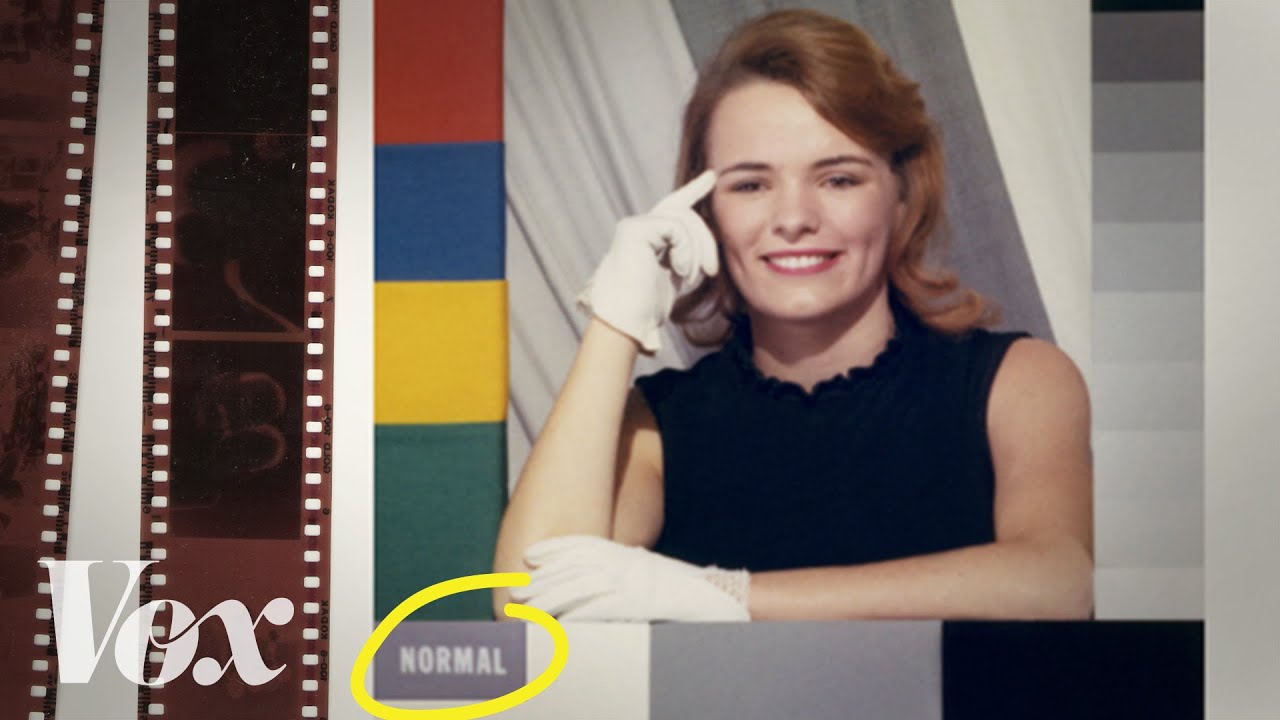

Vox: “Color Film Was Built For White People”

In short, film manufacturers tested with white faces, so the film got good at white faces, which were then what they kept testing with, and so on. It wasn’t seen as an issue until powerful parts of the photography market (furniture and chocolate companies, whose products were also brown) pushed for a change, after which the manufacturers used the better colors as marketing.

And the same issue shows up with automatic sinks and soap dispensers, whose sensors can fail to register darker skin.

I mean, I don’t believe that they start out thinking that it’s good to exclude large chunks of the population. I think they didn’t think it through, and didn’t think it would hurt anything.

But you’d think that, after a while, they’d realize how bad a look this is.